Software quality is no longer just about finding bugs; it is also about predicting defects, preventing them, and optimizing testing at scale. With the rise of Artificial Intelligence (AI) in Quality Assurance (QA), traditional testing methods are being augmented by tools that learn from data and help teams work more efficiently and accurately. As applications grow more complex and development cycles shrink, AI-driven solutions are changing how teams approach test case generation, defect prediction, and automation, which frees QA engineers for higher-value tasks. This blog looks at three examples: streamlining manual test case creation with AI-powered tools integrated into Jira and Azure DevOps, enhancing chatbot test automation with machine learning models, and accelerating test script development with GitHub Copilot. Together they show how AI is reshaping QA practice and where software testing is heading.

Revolutionizing Manual Test Case Generation with AI

Manual test case creation has long been a tedious, error-prone process that often leads to inconsistencies and gaps in test coverage. AI-powered test case generation changes this approach, helping QA teams automate test design, improve accuracy, and work more efficiently.

One such tool is AI Test Case Generator, which analyzes user stories and automatically generates structured test cases. Each test case includes a unique ID, title, description, detailed steps, expected results, and a priority level, so every case follows the same complete structure. Compared with writing cases by hand, AI-driven generation reduces human error and keeps test scenarios consistent.
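The structure described above can be modeled as a small record type. The sketch below is illustrative only: it is not the tool's actual output format, and the names `GeneratedTestCase`, `Priority`, `check_unique_ids`, and the ID pattern are hypothetical. It simply shows how the listed fields, plus a couple of basic completeness checks, might be represented.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    HIGH = "High"
    MEDIUM = "Medium"
    LOW = "Low"


@dataclass
class GeneratedTestCase:
    """One generated test case with the fields listed above (hypothetical schema)."""
    case_id: str               # unique ID, e.g. "TC-001" (format is an assumption)
    title: str
    description: str
    steps: list[str]           # ordered, human-readable test steps
    expected_result: str
    priority: Priority = Priority.MEDIUM

    def __post_init__(self) -> None:
        # Reject incomplete output instead of silently storing it.
        if not self.case_id.strip():
            raise ValueError("test case needs a non-empty ID")
        if not self.steps:
            raise ValueError(f"{self.case_id}: at least one step is required")


def check_unique_ids(cases: list[GeneratedTestCase]) -> None:
    """Raise ValueError if two generated cases share an ID."""
    seen: set[str] = set()
    for case in cases:
        if case.case_id in seen:
            raise ValueError(f"duplicate test case ID: {case.case_id}")
        seen.add(case.case_id)
```

Validating generated cases against a fixed schema like this is one way to enforce the consistency the paragraph describes. A malformed case fails immediately rather than surfacing later during execution.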

A key advantage of this approach is its integration with platforms like Atlassian Jira and Microsoft Azure DevOps. QA teams can turn user stories directly into executable test cases in the same tool, maintaining a clear traceability link between each requirement and the tests that cover it. Teams can also organize AI-generated test cases into structured suites, such as a regression suite, which makes it easier to track execution progress and maintain test artifacts over time.
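The traceability links and suite grouping described above can be illustrated with plain data structures. This is a minimal in-memory sketch. It does not call the Jira or Azure DevOps APIs, and the names `LinkedCase`, `build_suites`, `trace_matrix`, `uncovered`, and the story keys are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkedCase:
    case_id: str
    story_key: str  # requirement the case was generated from, e.g. "SHOP-42" (hypothetical)
    suite: str      # suite the case is organized under, e.g. "Regression"


def build_suites(cases: list[LinkedCase]) -> dict[str, list[str]]:
    """Group cases by suite: suite name -> sorted case IDs."""
    suites: dict[str, list[str]] = defaultdict(list)
    for c in cases:
        suites[c.suite].append(c.case_id)
    return {name: sorted(ids) for name, ids in suites.items()}


def trace_matrix(cases: list[LinkedCase]) -> dict[str, list[str]]:
    """Requirement -> sorted case IDs, so each story's coverage can be reviewed."""
    matrix: dict[str, list[str]] = defaultdict(list)
    for c in cases:
        matrix[c.story_key].append(c.case_id)
    return {key: sorted(ids) for key, ids in matrix.items()}


def uncovered(story_keys: list[str], cases: list[LinkedCase]) -> list[str]:
    """Stories that have no linked test case at all."""
    covered = {c.story_key for c in cases}
    return sorted(k for k in story_keys if k not in covered)
```

Keeping the story key on every case is what makes the reverse lookup possible. From the same records, a team can ask both "which tests cover this story?" and "which stories have no tests yet?".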

By automating the labor-intensive parts of test case creation, QA teams can spend more time on exploratory testing, refining complex scenarios, and closing coverage gaps. As organizations push for faster release cycles and higher software quality, AI-powered test case generation is becoming a practical way to make QA processes faster, more accurate, and more scalable.

Enhancing Chatbot Test Automation with Machine Learning

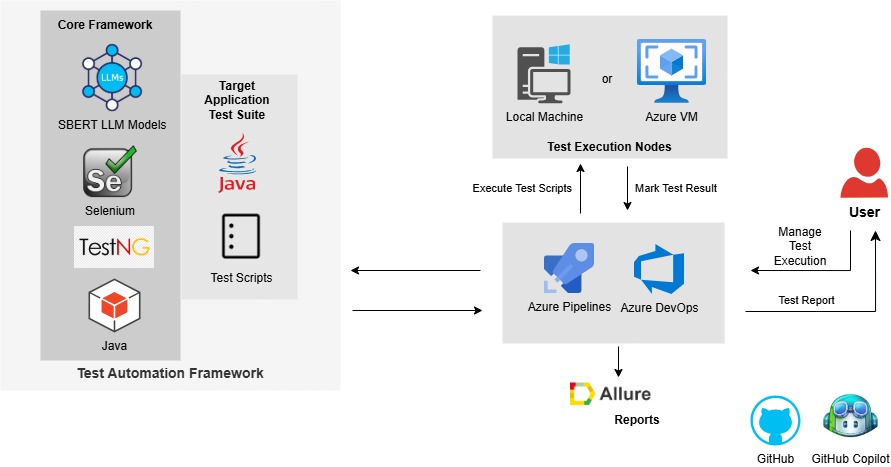

As chatbots become more integral to customer interactions, ensuring their accuracy and reliability is crucial. Traditional test automation struggles to validate chatbot responses because a correct answer can be phrased in many different ways, so exact string comparisons produce false failures. Machine learning-powered automation frameworks address this by enabling intelligent, scalable, and repeatable validation of chatbot functionality.

One key advancement in this space is the integration of SBERT (Sentence-BERT) into test automation frameworks. SBERT is a natural language processing model that modifies the BERT (Bidirectional Encoder Representations from Transformers) architecture, using siamese and triplet network structures, to produce semantically meaningful sentence embeddings. This makes it well suited to evaluating the semantic similarity between expected and actual chatbot responses. The framework encodes both responses with SBERT and computes the cosine similarity between the two embeddings. Comparing that score against a threshold turns it into a pass/fail assertion that checks whether the chatbot's output matches the expected meaning, even when the wording differs. This approach reduces manual review effort and makes response evaluation more reliable.
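The assertion step can be sketched in a few lines. This is not the framework's actual code. The function names and the default threshold of 0.8 are assumptions, and the embedding function is passed in, so the similarity logic can be shown without depending on a specific model.

```python
import math
from typing import Callable, Sequence

# Any function that maps a sentence to a fixed-length vector, e.g. an SBERT encoder.
Embed = Callable[[str], Sequence[float]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(a, b) = (a . b) / (|a| * |b|); ranges from -1 to 1."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def assert_semantically_similar(
    expected: str, actual: str, embed: Embed, threshold: float = 0.8  # threshold is an assumption
) -> float:
    """Raise AssertionError if `actual` is not close enough in meaning to `expected`.

    Returns the score so that passing comparisons can still be logged.
    """
    score = cosine_similarity(embed(expected), embed(actual))
    if score < threshold:
        raise AssertionError(
            f"similarity {score:.3f} < {threshold}: expected {expected!r}, got {actual!r}"
        )
    return score
```

With the `sentence-transformers` package installed, `embed` could be `SentenceTransformer("all-MiniLM-L6-v2").encode` (the model name is only an example). That library also provides `util.cos_sim` for the similarity step. The threshold should be calibrated on known good and bad response pairs rather than taken from this sketch.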

The framework is designed specifically for chatbot testing. It validates the chatbot's responses against predefined regression scenarios and checks each response for consistency with its expected answer. Conversational context (earlier turns in the dialogue) is excluded from the comparison. GitHub Copilot's code suggestions also support the development of the test scripts themselves. By automating functional regression testing of chatbots, QA teams can shift their focus toward exploratory testing and refining complex chatbot behaviors. This machine learning-powered framework helps maintain chatbot accuracy by flagging response regressions, and it makes the QA process for AI-driven applications more efficient and scalable.
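A regression run over predefined scenarios, with conversational context excluded, might look roughly like the sketch below. It is an assumption-laden illustration. `Scenario` and `run_regression` are hypothetical names. `ask` stands in for a chatbot client that opens a fresh session for every call, and `similarity` could be an SBERT-based cosine-similarity function.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    name: str
    prompt: str
    expected: str


def run_regression(
    scenarios: list[Scenario],
    ask: Callable[[str], str],                # hypothetical: one message, fresh session
    similarity: Callable[[str, str], float],  # e.g. SBERT cosine similarity
    threshold: float = 0.8,                   # assumed pass mark
) -> dict[str, float]:
    """Return {scenario name: score} for every scenario scoring below the threshold."""
    failures: dict[str, float] = {}
    for sc in scenarios:
        # Each prompt is sent on its own, so earlier turns cannot influence the answer.
        # This is one way to realize "excluding conversational context".
        actual = ask(sc.prompt)
        score = similarity(sc.expected, actual)
        if score < threshold:
            failures[sc.name] = score
    return failures
```

Returning the failing scores, not just a pass/fail flag, helps when tuning the threshold. Borderline scores near the cut-off usually deserve a manual look.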

Boosting Test Automation Efficiency with GitHub Copilot

AI-driven tools like GitHub Copilot are changing how QA teams approach test automation, making script development faster and more efficient. Copilot provides context-aware code suggestions for writing and maintaining automated test scripts, reducing the manual effort spent on repetitive coding tasks. By assisting with test scenario creation, test data generation, and assertions, Copilot helps QA engineers build maintainable automation frameworks with less effort. It can also suggest optimizations and more efficient approaches, which can help make test scripts both accurate and performant when the suggestions are reviewed carefully.

One of GitHub Copilot's most significant advantages is its integration with IDEs, which lets QA engineers receive real-time code suggestions tailored to their test automation framework. The Copilot Chat IDE plugin extends this with interactive, conversational coding assistance, making it easier to debug and refine test scripts. However, while Copilot accelerates test script development, QA teams must validate AI-generated code for accuracy and relevance. This matters most in UI automation and when generating unique test cases, where suggestions can be generic. Despite these limitations, GitHub Copilot is changing QA automation, enabling teams to focus on high-value testing activities, work more efficiently, and improve overall software quality in an increasingly AI-driven development landscape.

Conclusion

AI-driven advancements are reshaping software testing, helping QA teams automate complex processes, improve efficiency, and raise software quality. AI-powered test case generation improves coverage and traceability. Machine learning-driven chatbot automation validates conversational AI by meaning rather than exact wording. GitHub Copilot-assisted test automation streamlines script development. Together, these innovations are transforming traditional QA methodologies. By leveraging AI in test automation, organizations can reduce manual effort, accelerate testing cycles, and ship more reliable software. As AI continues to evolve, its role in QA automation is likely to expand, making it important for teams to adopt these tools to keep pace with an increasingly fast development environment.

About the Author

Sarthak Seth is a results-driven Technical Lead in Software Testing with over 10 years of experience in designing and implementing QA strategies and solutions for diverse clients. With a strong foundation in test automation, quality engineering, and AI-driven testing, he excels at developing innovative processes that align with emerging trends and industry best practices.